Invited Talks

Extreme PyTorch: Inside the Most Demanding ML Workloads—and the Open Challenges in Building AI Agents to Democratize Them

In this talk, we’ll explore how cutting-edge users are pushing PyTorch to its limits, from planetary-scale training on interactive supercomputers to ultra-efficient, real-time inference on exotic hardware. These power users give us a unique window into today’s most demanding ML systems challenges. We’ll also examine a bold idea that is top of mind for everyone at this conference: using AI agents to automate a large share of the work that these cutting-edge users currently do. This vision is far from realized. We’ll outline the open challenges in building such agents, and share concrete opportunities for open collaboration toward making SysML AI agents a reality.

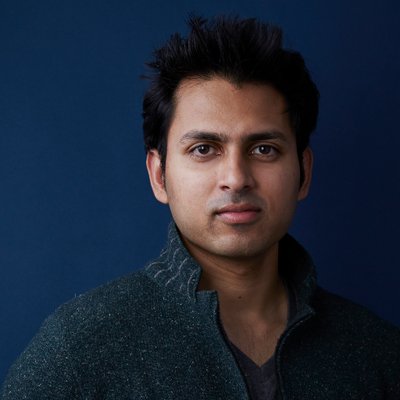

Speaker

Soumith Chintala

Soumith Chintala is a Scientist-Engineer focused on AI and Robotics, leading influential AI work such as PyTorch, DCGAN and Torch-7; work which is used by several top institutions including NASA, Meta, Google, Tesla, Microsoft, Disney, Genentech, and numerous other Fortune-500 companies and in the curriculum of top-ranked universities such as Stanford, Harvard, Oxford and MIT. He currently leads PyTorch and other AI projects at Meta, is a Visiting Professor at New York University, and maintains advisory roles at various institutions.

Lessons Learned from Successful PhD Students

This talk is for young PhD students and would-be PhD students, to help them understand how to have a satisfying and successful PhD in Machine Learning Systems. While it draws on my own experience during my PhD, creating such things as bitsandbytes and developing QLoRA, I will mainly draw on research about academic success and general patterns that I saw in successful PhD students to provide perspective. Key questions I will talk about: What is my research style? What should I work on? How should I work on it? How do I get the resources that I need for my work? How do I stay motivated?

Speaker

Tim Dettmers

LMArena: An Open Platform for Crowdsourced AI benchmarks

Recent advances in AI have unlocked new capabilities and applications; however, evaluating AI still poses significant challenges. We introduce LMArena, an open platform for evaluating AI based on human preferences. Our methodology employs a pairwise comparison approach and leverages input from a global user base through crowdsourcing. The platform has been operational for over two years, collecting ~3 million community votes. LMArena has emerged as one of the most popular LLM leaderboards, widely referenced by leading LLM developers and companies. Our website is publicly available at https://lmarena.ai

Speaker

Wei-Lin Chiang

Designing Models from the Hardware Up

This talk presents systems-level techniques for designing language models that are both high quality and highly efficient. I’ll introduce ThunderKittens, a GPU programming library that simplifies the development of hardware-friendly models, and show how it enabled BASED—an attention-free architecture built from simple, throughput-oriented components. These innovations made it possible to train state-of-the-art 8B–405B parameter attention-free models on academic resources and have influenced emerging approaches across research, industry, and open-source.

Speaker

Simran Arora

An AI stack: from scaling AI workloads to evaluating LLMs

Large language models (LLMs) have taken the world by storm—enabling new applications, intensifying GPU shortages, and raising concerns about the accuracy of their outputs. In this talk, I will present several projects I have worked on to address these challenges. Specifically, I will focus on: (i) Ray, a distributed framework for scaling AI workloads; (ii) vLLM and SGLang, two high-throughput inference engines for LLMs; and (iii) Chatbot Arena, a platform for accurate LLM benchmarking. I will conclude with key lessons learned and outline directions for future research.

Speaker

Ion Stoica

My area of research is at the intersection between AI and systems, cloud computing, and distributed systems. I am equally interested in designing algorithms and systems with strong theoretical foundations, and in providing practical implementations that are deployable in the real world.

Hardware-aware training and inference for large-scale AI

The scaling of large language models has led to impressive gains in language understanding, but at the cost of insatiable memory and bandwidth requirements. We take a principled approach of designing optimization and quantization algorithms that can reduce memory requirements without sacrificing accuracy. This includes gradient compression methods (GaLore, SignSGD) and a logarithmic number system for representation. We also design fine-grained memory reduction schemes such as KV cache compression, chunking and offloading to overcome memory bottlenecks in language models, especially in the reasoning mode where current memory requirements are massive. Such principles are broadly applicable and especially relevant to physical AI, where the memory and bandwidth requirements are even greater than those of frontier LLMs.

Speaker

Animashree Anandkumar

Professor Anandkumar's research interests are in the areas of large-scale machine learning, non-convex optimization and high-dimensional statistics. In particular, she has been spearheading the development and analysis of tensor algorithms for machine learning. Tensor decomposition methods are embarrassingly parallel and scalable to enormous datasets. They are guaranteed to converge to the global optimum and yield consistent estimates for many probabilistic models such as topic models, community models, and hidden Markov models. More generally, Professor Anandkumar has been investigating efficient techniques to speed up non-convex optimization such as escaping saddle points efficiently.

Responsible Finetuning of Large Language Models

The human-like generative ability of Large Language Models (LLMs) has ushered in a new era of foundational models and generative AI, unlocking new possibilities and driving cross-domain innovations. However, the transformative potential of LLMs has been seriously challenged by the problematic hallucinations of LLMs, which may lead to misinformation, biases, and harmful content, making responsible finetuning of LLMs a grand challenge. Safety alignment of pretrained LLMs represents an important step toward ensuring that their outputs are helpful, harmless, and honest, respecting human preferences and societal values. However, recent studies have shown that many safety-aligned LLMs suffer from the security, privacy, and ethical risks of user finetuning: well-aligned LLMs can easily be broken and produce harmful, unhelpful, or untruthful content in the presence of a small amount of harmful finetuning data. In this keynote, I will discuss some potential vulnerabilities and risks of existing safety alignment and finetuning techniques, and share some of our recent research efforts towards developing a responsible framework and techniques for more robust alignment/finetuning of LLMs.

Speaker

Ling Liu

Ling Liu is a Professor in the School of Computer Science at Georgia Institute of Technology. She directs the research programs in the Distributed Data Intensive Systems Lab (DiSL), examining various aspects of Internet-scale big data powered artificial intelligence (AI) systems, algorithms and analytics, including performance, reliability, privacy, security and trust. Her research in the ML systems area is mainly centered on efficient AI systems and algorithms, as well as trustworthy AI through developing AI security and AI privacy guardrails. Prof. Ling Liu’s current research is primarily supported by the National Science Foundation under CISE programs, Cisco, and IBM.
